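Install JDK 8

Install the Oracle JDK from a PPA:

sudo apt-add-repository ppa:webupd8team/java

Or install OpenJDK. Hadoop 3.0 requires Java 8. default-jdk installs OpenJDK 8 on Ubuntu 16.04, but newer Ubuntu releases map it to a later Java version, so there installing openjdk-8-jdk is the safer choice:

sudo apt-get install default-jdk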

Create a hadoop user:

sudo useradd -m hadoop -s /bin/bash

Install OpenSSH Server:

sudo apt-get install openssh-server

Set up SSH authorization (if ~/.ssh does not exist yet, run ssh localhost once to create it):

cd ~/.ssh/

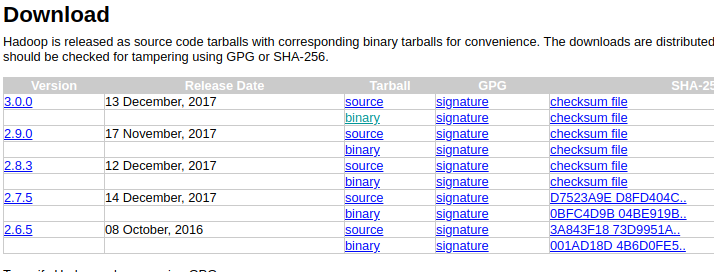
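start-dfs.sh and start-yarn.sh launch the daemons over SSH, so the hadoop user needs passwordless SSH to localhost. A minimal sketch of the usual setup, assuming an RSA key with an empty passphrase at the default path (as in the Apache single-node setup guide), run as the hadoop user:

```shell
# Set up passwordless SSH to localhost for the current user (run as hadoop).
# Assumption: an RSA key with an empty passphrase at ~/.ssh/id_rsa.
set -eu

ssh_dir="$HOME/.ssh"
mkdir -p "$ssh_dir"
chmod 700 "$ssh_dir"

# Generate a key pair only if none exists yet (-P '' = empty passphrase, -q = quiet)
if [ ! -f "$ssh_dir/id_rsa" ]; then
  ssh-keygen -t rsa -P '' -f "$ssh_dir/id_rsa" -q
fi

# Authorize the public key, skipping it if it is already listed
touch "$ssh_dir/authorized_keys"
pub_key=$(cat "$ssh_dir/id_rsa.pub")
if ! grep -qxF "$pub_key" "$ssh_dir/authorized_keys"; then
  printf '%s\n' "$pub_key" >> "$ssh_dir/authorized_keys"
fi
chmod 600 "$ssh_dir/authorized_keys"
```

Afterwards, ssh localhost should log in without asking for a password. The grep check keeps the script safe to rerun without duplicating the key.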

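Download Hadoop

http://hadoop.apache.org/releases.html

Choose the binary download of the 3.0 stable release, download it and extract it.

Install Hadoop

Extract the archive, move it to /opt/hadoop (the location used in the rest of this guide) and give the hadoop user ownership:

tar -xzvf hadoop-3.0.0.tar.gz

sudo mv hadoop-3.0.0 /opt/hadoop

sudo chown -R hadoop:hadoop /opt/hadoop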

Add Hadoop's bin and sbin directories to PATH:

export PATH=$PATH:/opt/hadoop/sbin:/opt/hadoop/bin
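The export above only lasts for the current shell session. A minimal sketch that makes it persistent by appending it to ~/.bashrc once; HADOOP_HOME=/opt/hadoop matches the install location used here, and the "# hadoop environment" marker line is a hypothetical convention used only to avoid duplicate entries:

```shell
# Append Hadoop's environment to ~/.bashrc exactly once.
# Assumes Hadoop was extracted to /opt/hadoop, as in the PATH line above.
bashrc="$HOME/.bashrc"
marker='# hadoop environment'
touch "$bashrc"
if ! grep -qxF "$marker" "$bashrc"; then
  # Quoted 'EOF' keeps $PATH and $HADOOP_HOME literal, so they expand at login time
  cat >> "$bashrc" <<'EOF'
# hadoop environment
export HADOOP_HOME=/opt/hadoop
export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin
EOF
fi
```

Run . ~/.bashrc (or open a new shell) to pick up the change.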

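Set JAVA_HOME

Find the JDK installation directory by resolving the java symlink:

readlink -f /usr/bin/java | sed "s:bin/java::"

Example output with the Oracle JDK:

/usr/lib/jvm/java-8-oracle/jre/

Then, from /opt/hadoop, edit hadoop-env.sh:

sudo vi ./etc/hadoop/hadoop-env.sh

and set JAVA_HOME to that directory:

export JAVA_HOME=/usr/lib/jvm/java-8-oracle/jre/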
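The readlink | sed pipeline follows the /usr/bin/java symlink chain to the real binary and strips the trailing bin/java. A minimal sketch of the same derivation as a reusable shell function; the name java_home_from is hypothetical:

```shell
# Hypothetical helper: derive JAVA_HOME from a java launcher path by resolving
# all symlinks and stripping the trailing "bin/java", mirroring
#   readlink -f /usr/bin/java | sed "s:bin/java::"
java_home_from() {
  local resolved
  resolved=$(readlink -f "$1") || return 1
  case $resolved in
    */bin/java) printf '%s\n' "${resolved%bin/java}" ;;
    *) echo "not a java binary: $resolved" >&2; return 1 ;;
  esac
}
```

For example, echo "export JAVA_HOME=$(java_home_from /usr/bin/java)" | sudo tee -a /opt/hadoop/etc/hadoop/hadoop-env.sh appends the line instead of editing the file by hand. Unlike the sed version, the function refuses paths that do not end in bin/java.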

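Run Hadoop (standalone mode)

The commands below are run from /opt/hadoop. Running ./bin/hadoop without arguments prints its usage, which confirms the installation works:

./bin/hadoop

Copy Hadoop's XML configuration files into a local input directory and run the bundled grep example on them:

mkdir ~/input

cp /opt/hadoop/etc/hadoop/*.xml ~/input

./bin/hadoop jar ./share/hadoop/mapreduce/hadoop-mapreduce-examples-3.0.0.jar grep ~/input ~/grep_example 'principal[.]*'

View the result:

cat ~/grep_example/*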
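The grep example is a MapReduce job that counts every match of the regular expression across the input files and writes the matches sorted by count, most frequent first, as "count<TAB>match". A rough local cross-check with standard Unix tools, useful for sanity-checking the job's output; the function name local_grep_count is hypothetical, and POSIX ERE only agrees with Java's regex syntax for simple patterns like the ones used here:

```shell
# Hypothetical helper: count each regex match across files, most frequent first,
# printed as "count<TAB>match" like the Hadoop grep example's output.
local_grep_count() {
  local pattern=$1
  shift
  # -o: one match per line, -h: no file names, -E: extended regex
  grep -ohE -- "$pattern" "$@" \
    | sort | uniq -c | sort -rn \
    | awk '{ c = $1; sub(/^ *[0-9]+ /, ""); print c "\t" $0 }'
}
```

For example, local_grep_count 'principal[.]*' ~/input/*.xml should list the same matches as cat ~/grep_example/*, although matches with equal counts may appear in a different order.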

Pseudo-Distributed Configuration

Edit core-site.xml:

vi /opt/hadoop/etc/hadoop/core-site.xml

<configuration>
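A minimal core-site.xml for pseudo-distributed mode, written as a shell function that prints the file. fs.defaultFS = hdfs://localhost:9000 follows the Apache single-node setup guide; the hadoop.tmp.dir value /opt/hadoop/tmp is an assumption, chosen because the default lives under /tmp, which may be cleared on reboot and take the HDFS metadata with it:

```shell
# Print a minimal core-site.xml for a single-node (pseudo-distributed) cluster.
# fs.defaultFS: URI of the local NameNode that HDFS clients connect to.
# hadoop.tmp.dir: assumed base directory for HDFS data outside /tmp.
core_site_xml() {
  cat <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/opt/hadoop/tmp</value>
  </property>
</configuration>
EOF
}
```

Write it with core_site_xml > /opt/hadoop/etc/hadoop/core-site.xml, which replaces the empty default file.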

Edit hdfs-site.xml:

vi /opt/hadoop/etc/hadoop/hdfs-site.xml

<configuration>
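On a single node there is only one DataNode, so HDFS cannot place the default three replicas of each block. A minimal hdfs-site.xml sketch that lowers dfs.replication to 1, in the same print-a-file style:

```shell
# Print a minimal hdfs-site.xml: with a single DataNode, each block can have
# only one copy, so the replication factor is lowered from the default 3 to 1.
hdfs_site_xml() {
  cat <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
</configuration>
EOF
}
```

Write it with hdfs_site_xml > /opt/hadoop/etc/hadoop/hdfs-site.xml.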

Format the NameNode:

./bin/hdfs namenode -format

Start the NameNode and DataNode daemons:

./sbin/start-dfs.sh

Stop them with:

./sbin/stop-dfs.sh

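Run jps to check the running Java processes. After start-dfs.sh, NameNode, DataNode and SecondaryNameNode should be listed.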
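Checking the jps listing by eye is easy to get wrong when a daemon has just crashed. A small sketch that reads jps output and reports which of the three HDFS daemons are missing; the function name check_hdfs_daemons is hypothetical:

```shell
# Hypothetical helper: read `jps` output on stdin and report any HDFS daemon
# started by start-dfs.sh that is missing. Returns non-zero if one is absent.
check_hdfs_daemons() {
  local jps_output status daemon
  jps_output=$(cat)
  status=0
  for daemon in NameNode DataNode SecondaryNameNode; do
    # jps prints "<pid> <MainClassName>", so compare against the second field
    if ! printf '%s\n' "$jps_output" \
        | awk -v d="$daemon" '$2 == d { found = 1 } END { exit (found ? 0 : 1) }'; then
      echo "missing: $daemon"
      status=1
    fi
  done
  return "$status"
}
```

For example, jps | check_hdfs_daemons && echo "HDFS is up". If a daemon is missing, its log under /opt/hadoop/logs usually explains why.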

NameNode web UI:

http://localhost:9870

In Hadoop 3.x the NameNode web UI listens on port 9870 (it was 50070 in Hadoop 2.x).

Run a Pseudo-Distributed Example

Create the user directory in HDFS:

./bin/hdfs dfs -mkdir -p /user/hadoop

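Copy the sample XML files into HDFS as input. Relative HDFS paths resolve against /user/<username>, so input below means /user/hadoop/input: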

./bin/hdfs dfs -mkdir input

./bin/hdfs dfs -put /opt/hadoop/etc/hadoop/*.xml input

List the files:

./bin/hdfs dfs -ls input

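Run the MapReduce job in pseudo-distributed mode. The output directory must not already exist, so delete it with ./bin/hdfs dfs -rm -r output before rerunning:

./bin/hadoop jar /opt/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-*.jar grep input output 'dfs[a-z.]+'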

View the results:

./bin/hdfs dfs -cat output/*

Copy the results back to the local file system:

./bin/hdfs dfs -get output /opt/hadoop/output

Start YARN

Edit mapred-site.xml (in /opt/hadoop/etc/hadoop):

vi mapred-site.xml

<configuration>
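mapreduce.framework.name defaults to local, which runs jobs in a single JVM without YARN. A minimal mapred-site.xml sketch that submits jobs to YARN instead; the Apache single-node guide for later 3.x releases also sets mapreduce.application.classpath, which may be needed if jobs fail to find the MapReduce classes:

```shell
# Print a minimal mapred-site.xml that runs MapReduce jobs on YARN
# instead of the default local runner.
mapred_site_xml() {
  cat <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>
EOF
}
```

Write it with mapred_site_xml > /opt/hadoop/etc/hadoop/mapred-site.xml.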

Edit yarn-site.xml (in /opt/hadoop/etc/hadoop):

vi yarn-site.xml

<configuration>
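MapReduce on YARN needs the NodeManager to run the shuffle service that moves map output to the reducers. A minimal yarn-site.xml sketch enabling it; the Apache guide for later 3.x releases also sets yarn.nodemanager.env-whitelist, which may be needed depending on the environment:

```shell
# Print a minimal yarn-site.xml that enables the MapReduce shuffle
# auxiliary service in the NodeManager.
yarn_site_xml() {
  cat <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
</configuration>
EOF
}
```

Write it with yarn_site_xml > /opt/hadoop/etc/hadoop/yarn-site.xml.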

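Start YARN:

./sbin/start-yarn.sh

jps should now also list ResourceManager and NodeManager, and the ResourceManager web UI is available at http://localhost:8088.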

Stop YARN:

./sbin/stop-yarn.sh
